
With Privatemode and Tabby, you can run large AI models confidentially in the cloud – with the same interface used for your self-hosted LLMs.

Many Tabby users value local control and zero‑leakage workflows but are limited by the capabilities of self-hosted LLMs. Privatemode removes the trade‑off between capability and confidentiality by providing an OpenAI‑compatible, attested service that never exposes your data to cloud or service providers.

You get state‑of‑the‑art models with full control over your data, while Tabby's elegant interface makes Privatemode easier to use than ever. Download Tabby for Mac.

Integrating Privatemode into Tabby brings cloud-AI capabilities to your web AI interface while keeping your data confidential through end-to-end encryption and confidential computing. Plus, Privatemode is designed to never learn from your data.

With Privatemode, you can choose from state-of-the-art LLMs to power your workflows.

You can start using Privatemode in minutes. The setup is straightforward and fully compatible with Tabby.

If you don’t have a Privatemode API key yet, you can generate one for free here.

The proxy verifies the integrity of the Privatemode service using confidential computing-based remote attestation. It also encrypts all data before sending it and decrypts the responses it receives.
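Because the service is OpenAI-compatible, a client only has to address the local proxy rather than a remote endpoint. The following is a minimal sketch of that idea, not the official client: it assumes the proxy listens on `http://localhost:8080` and serves the usual `/v1/chat/completions` route, and the model name `example-model` is a hypothetical placeholder. Adjust all three to your setup.

```python
import json
import urllib.request

# Assumption: the Privatemode proxy is reachable locally at this address.
PROXY_BASE_URL = "http://localhost:8080/v1"


def build_chat_request(prompt, model="example-model", base_url=PROXY_BASE_URL):
    """Build an OpenAI-style chat completion request aimed at the local proxy.

    "example-model" is a placeholder, not a real model identifier.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request to the proxy and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a proxy running, `send_chat_request(build_chat_request("Hello"))` would return the model's reply. The client only ever sends plaintext to localhost; encryption and attestation are handled by the proxy as described above.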

To install Tabby on macOS using Homebrew, run the command below. This will download and set up the Tabby CLI so you can run and manage the Tabby server:

Create the configuration directory and then generate the config file that tells Tabby how to connect to Privatemode AI.
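As an illustration of what this step produces, the sketch below writes a Tabby `config.toml` that points the chat model at an OpenAI-compatible HTTP endpoint. It assumes Tabby reads its configuration from `~/.tabby/config.toml`, that the Privatemode proxy runs at `http://localhost:8080/v1`, and that `example-model` stands in for the model you actually want to use. The endpoint, model name, and empty API key are placeholders, not values taken from this guide.

```python
from pathlib import Path


def render_tabby_config(
    api_endpoint="http://localhost:8080/v1",  # assumed local proxy address
    model_name="example-model",               # hypothetical placeholder
    api_key="",                               # placeholder; set if your setup needs one
):
    """Return the TOML text for a chat model served over an OpenAI-style API."""
    lines = [
        "[model.chat.http]",
        'kind = "openai/chat"',
        f'model_name = "{model_name}"',
        f'api_endpoint = "{api_endpoint}"',
        f'api_key = "{api_key}"',
    ]
    return "\n".join(lines) + "\n"


def write_tabby_config(config_dir=Path.home() / ".tabby"):
    """Create the config directory if needed and write config.toml into it."""
    config_dir.mkdir(parents=True, exist_ok=True)
    config_path = config_dir / "config.toml"
    config_path.write_text(render_tabby_config(), encoding="utf-8")
    return config_path
```

Calling `write_tabby_config()` overwrites any existing `config.toml` in that directory, so back up an existing configuration first.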

To start Tabby with the configuration you just created, run the following command, which launches the Tabby server.

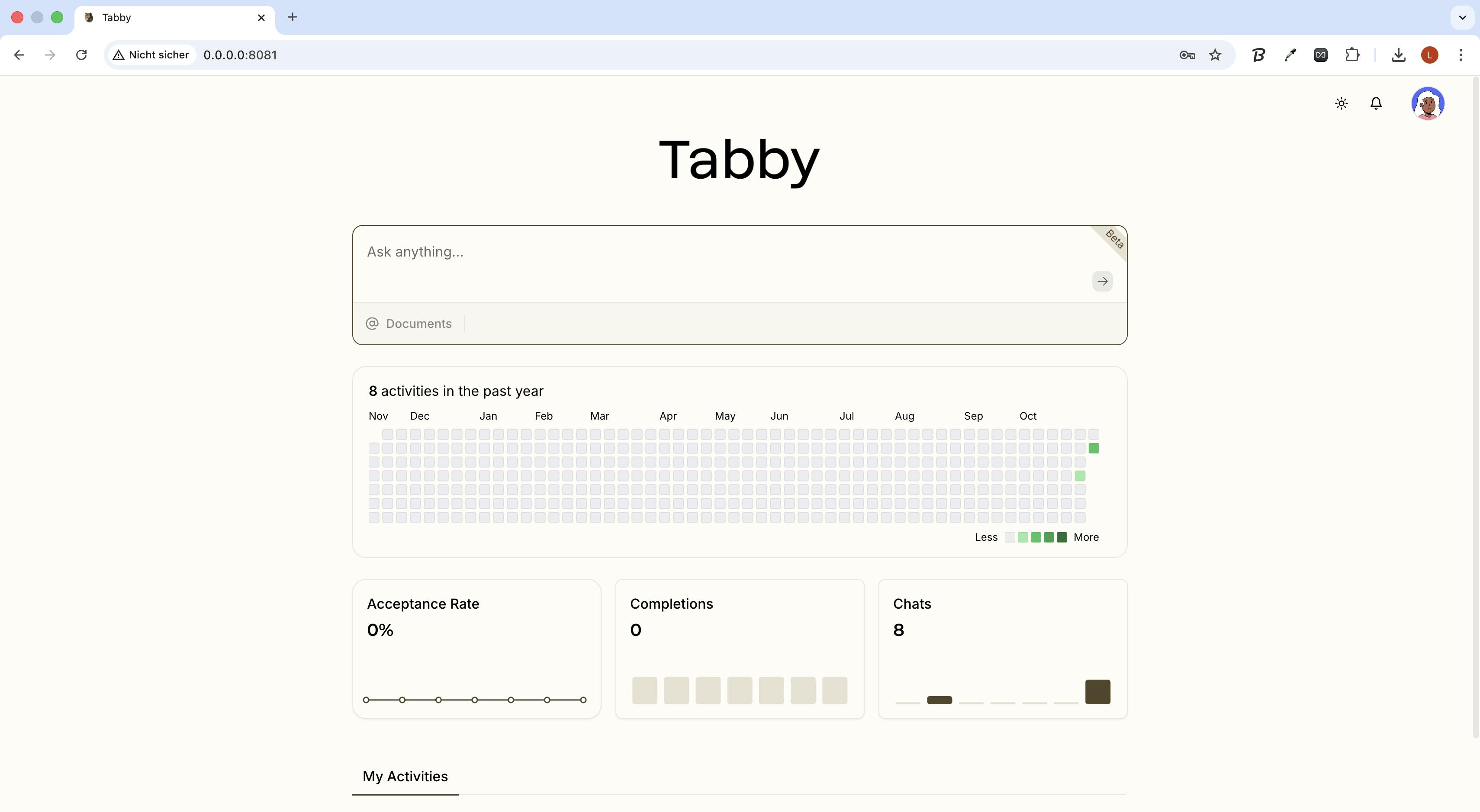

You've successfully integrated Confidential AI into Tabby. Now open this link in your browser and start your first confidential chat using Tabby and Privatemode AI.

© 2026 Edgeless Systems GmbH